Chapter 6: Graphs and Trees

Section 6.4 Huffman Codes

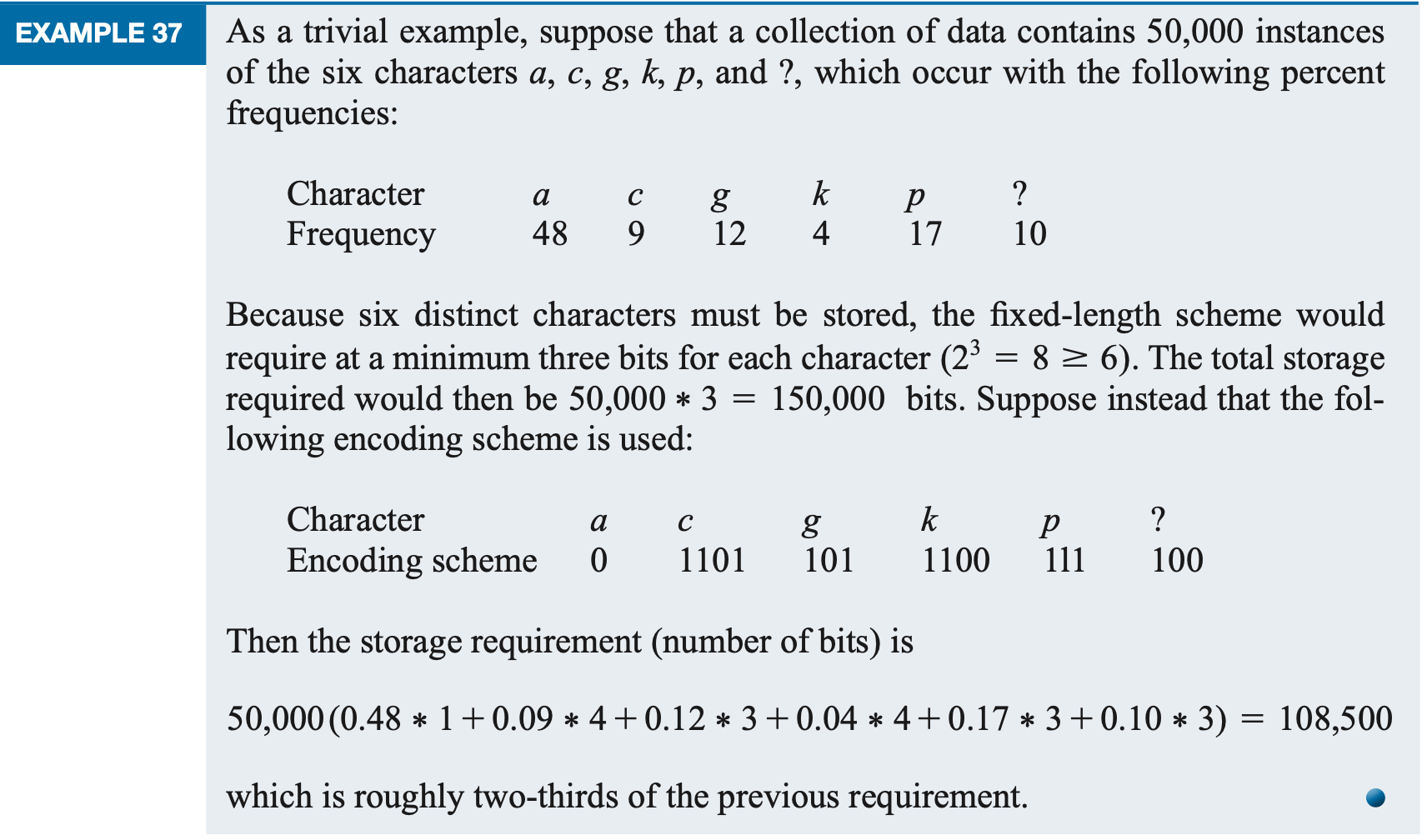

Huffman coding is a method of data compression that assigns shorter binary codes to more frequent characters and longer codes to less frequent ones. The main idea is to use variable-length codes rather than fixed-length codes, so the overall size of the encoded data is minimized.

Huffman coding is lossless, meaning you can perfectly reconstruct the original data, no data is lost during compression. It’s often used in file compression formats, like ZIP, and in multimedia codecs to reduce file size, with more advanced methods applying Huffman coding alongside additional preprocessing steps to make compression more efficient and robust than the classic static approach.

Huffman Codes

Character data consist of letters of the alphabet (both uppercase and lowercase), punctuation symbols, and other keyboard symbols such as @ and %. Computers store character data in binary form, as a sequence of 0s and 1s, usually by fixing some length n so that \(2^n\) is at least as large as the number of distinct characters and then encoding each distinct character as a particular sequence of n bits. Each character must be encoded into its fixed binary sequence for electronic storage, and then the binary sequence must be decoded when the character is to be displayed. The most common encoding scheme for many years was ASCII (American Standard Code for Information Interchange), which uses n = 8, so that each character requires 8 bits to store. However, \(2^8 = 256\), so a maximum of 256 characters could be encoded. This was enough for the English alphabet, punctuation, and special characters, but as electronic data storage spread around the world, it was not enough to include characters found in other languages such as Russian, Japanese, Arabic, Greek, and many others. Unicode (in general) uses 16 bits to encode a single character, so that \(2^{16} = 65536\) character encodings are now available. But whatever value is chosen for n, each character requires the same amount of storage space.

Suppose a collection of character data to be stored in a file in binary form is large enough that the amount of storage required is a consideration. Suppose also that the file is archival in nature, and its contents will not often be changed. Then it may be worthwhile to invest some extra effort in the encoding process if the amount of storage space required for the file could be reduced.

Rather than using a fixed number of bits per character, an encoding scheme could use a variable number of bits and store frequently occurring characters as sequences with fewer bits. To store all the distinct characters, some sequences will still have to be long, but if the longer sequences are used for characters that occur less frequently, the overall storage required should be reduced. This approach requires knowledge of the particular file contents, which is why it is best suited for a file whose contents will not be frequently changed. We will study such a data compression or data compaction scheme here, because it is best described as a series of actions taken on binary trees. Huffman codes are a basic technique for doing data compression.

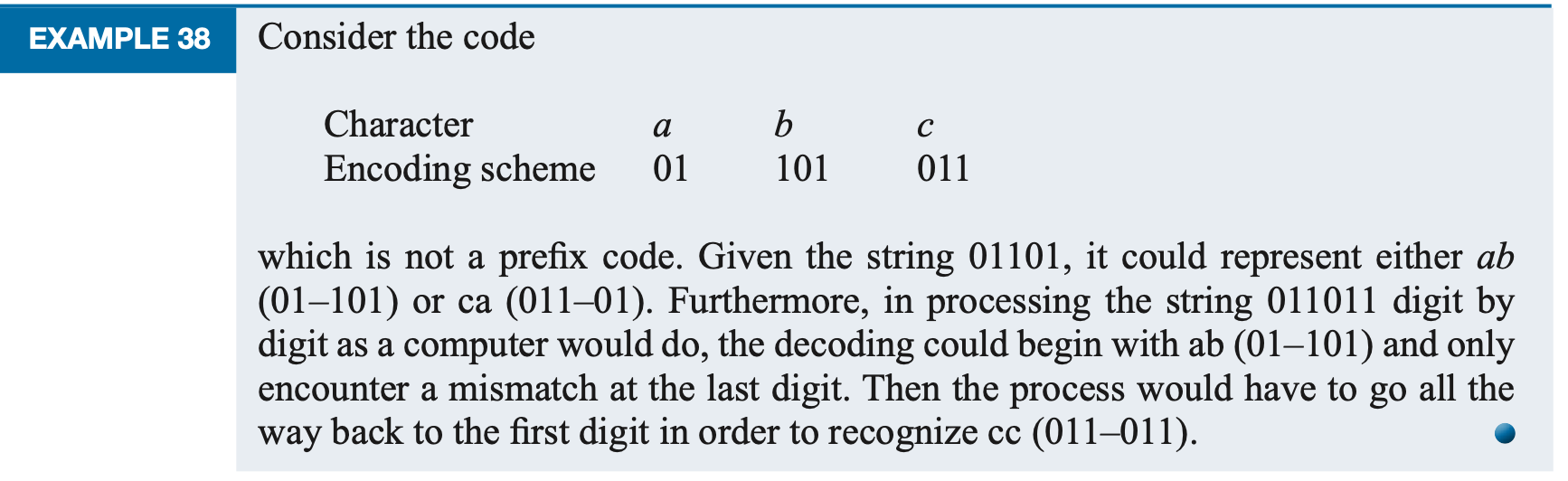

In the fixed-length storage scheme with n bits for each character, the long string of bits within the encoded file can be broken up into the code for successive characters by simply looking at n bits at a time. This makes it easy to decode the file. In the variable-length code, there must be a way to tell when the sequence for one character ends and the sequence for another character begins.

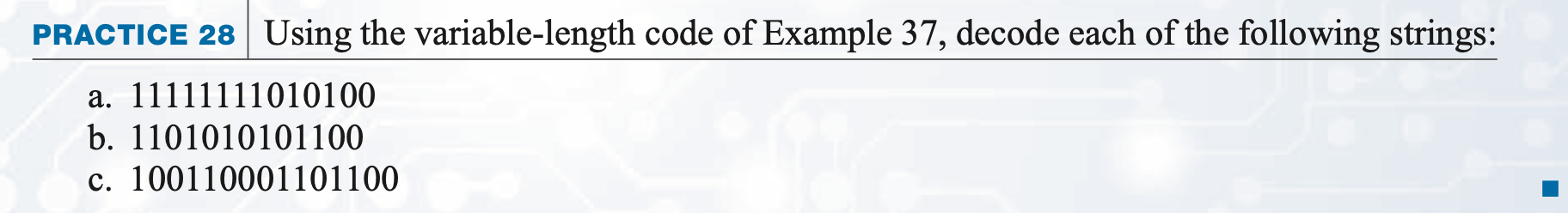

In Practice 28 the strings can be broken into the representation of characters in only one way. As each new digit is considered, the possibilities are narrowed as to which character is being represented until the character is uniquely identified by the end of that character's representation. There is never any need to guess at what the character might be and then backtrack if our guess proves wrong. This ability to decode uniquely without false starts and backtracking comes about because the code is an example of a prefix code . In a prefix code, the code for any character is never the prefix of the code for any other character. (A prefix code is therefore an "antiprefix" code!)

Huffman Encoding Algorithm

Huffman encoding is a type of prefix code, which means no encoded character (or symbol) is a prefix of another. This property ensures that the resulting bitstream can be decoded and encoded unambiguously.In the context of Huffman coding, encoding and decoding are the two main processes involved in compressing and reconstructing data:

- 1. Encoding (Compressing the Data)

- ♣ Each symbol/character in the original data is replaced with its binary code from the Huffman tree.

- ♣ The original data is transformed into a compressed sequence of binary bits.

- 2. Decoding (Reconstructing the Original Data)

- ♣ The decoder uses the same Huffman tree to interpret the bitstream.

- ♣ The binary sequence is transformed back into the original data.

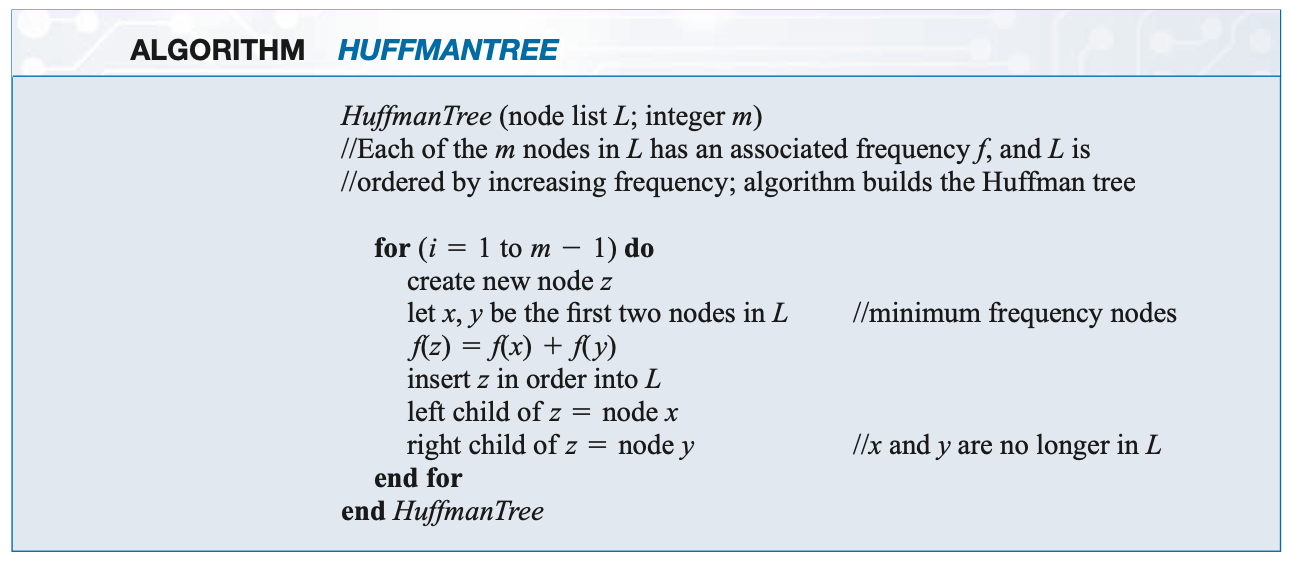

In our approach to prefix codes, we will build binary trees with the characters as leaves. Once the tree is built, a binary code can be assigned to each character by simply tracing the path from the root to that leaf, using 0 for a left branch and 1 for a right branch . Because no leaf precedes any other leaf on some path from the root, the code will be a prefix code.

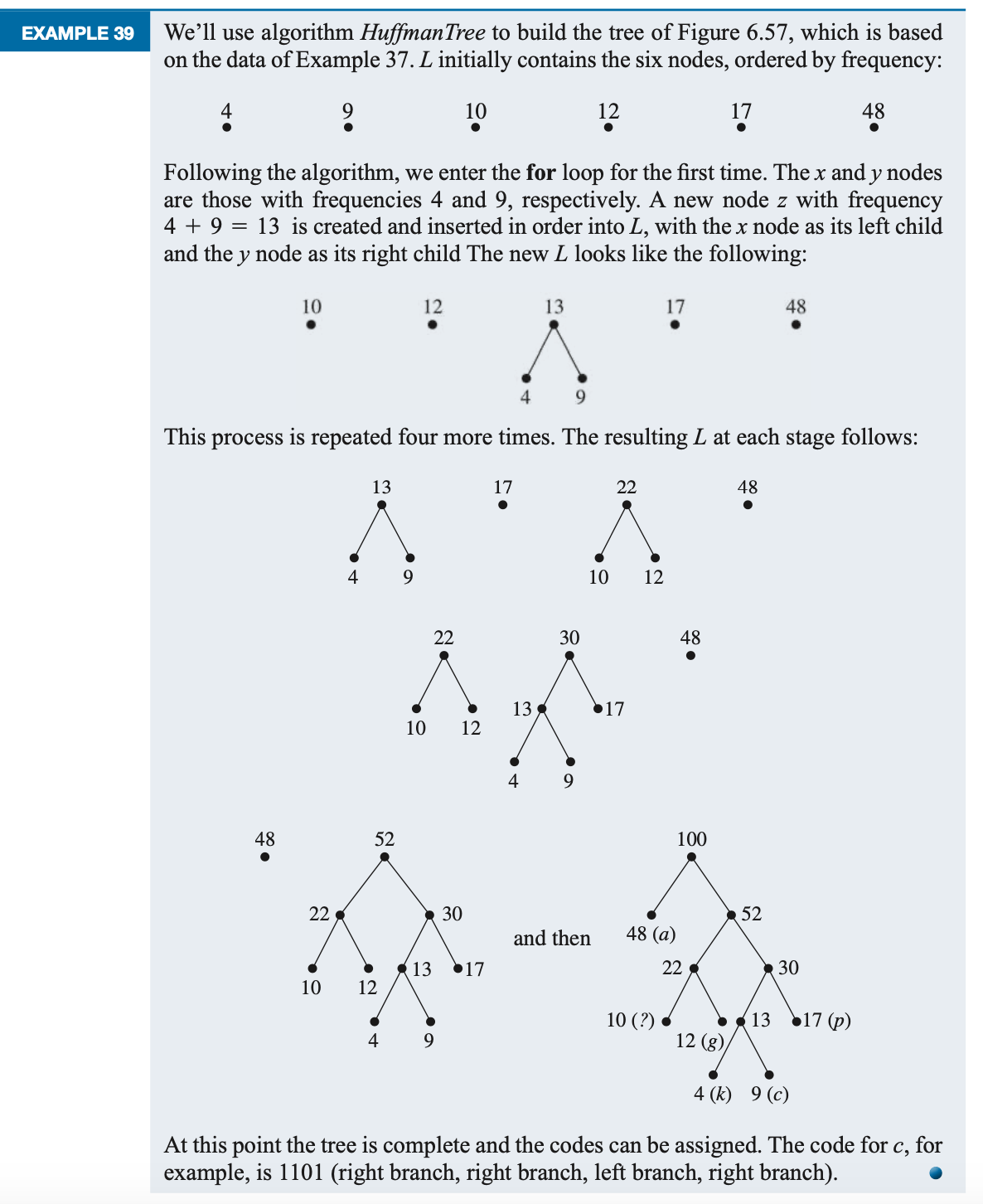

1. Create tree nodes containing the character and the frequenceis.

2. Sort based on frequencey-ascending

3. Take first two nodes in the list and create a parent node with the first node as the left child and the second node as the right child. The parent frequency is the sum of the two children frequencies. The children are removed from the list and parent is left in the list.

4. repeat steps 2 and 3 until there is only one node in the list.

Once the tree is built, a binary code can be assigned to each character by simply tracing the path from the root to that leaf, using 0 for a left branch and 1 for a right branch .

a: 0

c: 1101

g: 101

k: 1100

p: 111

?: 100

Using the above code, we can decode: 110111111001000

the result would be : cpk?a

Or encode: cpag?

the result would be: 11011110101100

Practice

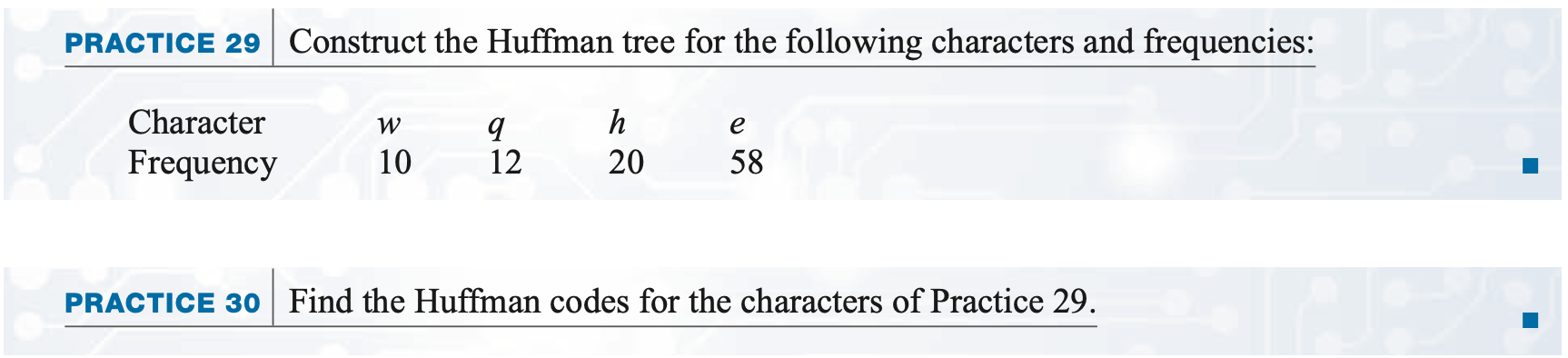

1.

(2)Please find the Huffman code for each character.

(3) A file consisting of 10,000 instances of these four characters is stored using the ASCII code encoding scheme. How many bits are required for each character? and what is the toal number of bits needed for this file?

(4) Storing the same file using using a fixed-length binary encoding scheme. How many bits are required for each character? and what is the toal number of bits needed for this file?

(5) Storing the same file using the Huffman code find in (2), how many bits are needed in total?

2. File of 50,000 DNA characters(i.e., each of the characters is one of A, C, T, or G. Assume you have the following percentages of each

letter A C T G

frequency 22 28 22 28

Encoding the characters using extended ASCII (8 bits per character) produces:

50000*8 = 400,000 bits of data (1) Please encode the DNA characters using huffman code. Please construct a huffman tree.

(2) How many bits are needed for storing the file of 50,000 DNA characters using the Huffman code of (1)?

(3) Using the huffman code, please decode: 101110110001

(4) Using the huffman code, please encode: ATATCG

Application of Huffman Codes

1. ZIP file format (lossless)

- ♣ ZIP = LZ77 + Huffman (via DEFLATE)

- ♣ Inside ZIP, the DEFLATE algorithm is applied:

- LZ77 first reduces repeated strings/patterns.

- Huffman coding compresses the result based on symbol frequency.

2. JPEG (lossy)

- ♣ JPEG image compression is mostly lossy, but it does use Huffman coding as a final step.

- ♣ JPEG pipeline:

- Convert image to YCbCr color space.

- Apply DCT (Discrete Cosine Transform) to blocks of pixels (lossy step)

- Quantization (major source of loss).

- Convert quantized DCT coefficients to a zig-zag order.

- Run-Length Encoding (RLE) on the zig-zag coefficients.

- Huffman coding applied to the RLE output for entropy coding.